Invented by Thomas Pheiffer, Shun Miao, Rui Liao, Pavlo Dyban, Michael Suehling, Tommaso Mansi, Siemens Healthcare GmbH

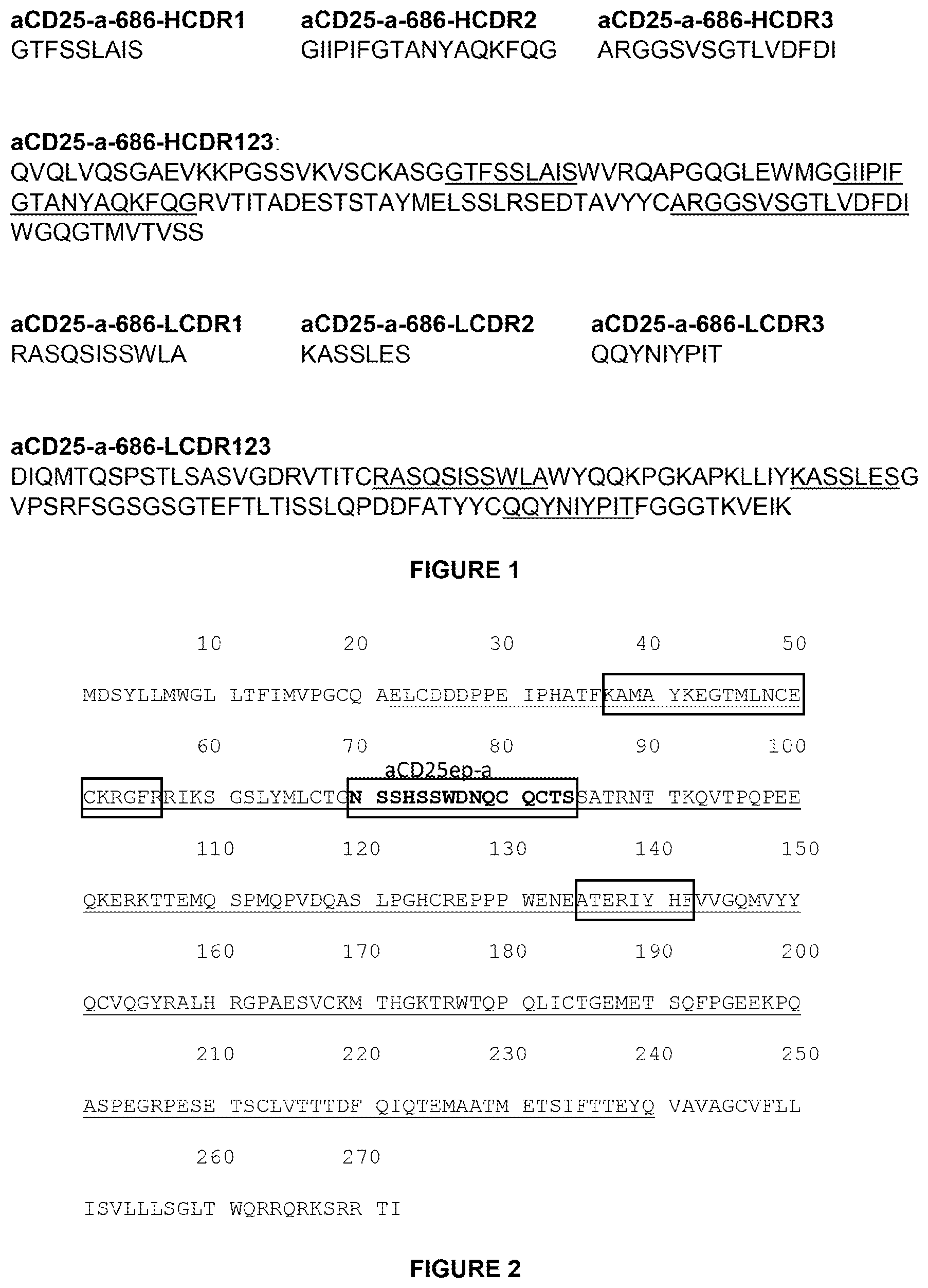

Automatic change detection refers to the process of analyzing medical images taken at different time points and identifying any changes or abnormalities that may have occurred. This technology utilizes sophisticated algorithms and machine learning techniques to compare images and highlight any differences, allowing healthcare professionals to quickly identify potential issues and take appropriate action.

One of the key drivers of the market for this technology is the rising prevalence of chronic diseases such as cancer, cardiovascular diseases, and neurological disorders. Regular monitoring and early detection of changes in medical images can significantly improve the chances of successful treatment and management of these conditions. Automatic change detection can help healthcare providers detect subtle changes that may not be easily noticeable to the human eye, enabling timely interventions and reducing the risk of complications.

Moreover, the increasing adoption of digital imaging technologies in healthcare facilities is fueling the demand for automatic change detection solutions. Digital imaging offers numerous advantages over traditional film-based imaging, including faster image acquisition, easier storage and retrieval, and the ability to enhance and manipulate images for better visualization. Automatic change detection algorithms can be seamlessly integrated into existing digital imaging systems, providing healthcare professionals with a powerful tool to aid in their diagnosis and treatment decisions.

Another factor driving the market growth is the need for efficient and cost-effective healthcare solutions. Manual review of medical images is time-consuming and prone to human error, especially when dealing with large volumes of data. Automatic change detection algorithms can significantly reduce the time and effort required for image analysis, allowing healthcare professionals to focus on patient care and treatment planning. Additionally, these solutions can help reduce healthcare costs by minimizing the need for unnecessary repeat imaging studies and enabling early intervention to prevent disease progression.

The market for automatic change detection of medical images is highly competitive, with several key players offering innovative solutions. These companies are continuously investing in research and development to enhance the accuracy and efficiency of their algorithms. They are also collaborating with healthcare providers and research institutions to gather real-world data and validate the performance of their solutions.

However, there are challenges that need to be addressed for the widespread adoption of automatic change detection technology. One of the major concerns is the potential for false positives or false negatives, which can lead to unnecessary interventions or missed diagnoses. Ongoing research and development efforts are focused on improving the accuracy and reliability of these algorithms to minimize such errors.

In conclusion, the market for automatic change detection of medical images is witnessing significant growth due to the increasing demand for accurate and efficient diagnosis. This technology has the potential to revolutionize healthcare by enabling early detection of diseases and conditions, improving patient outcomes, and reducing healthcare costs. With ongoing advancements in technology and continuous research efforts, the future looks promising for automatic change detection in medical imaging.

The Siemens Healthcare GmbH invention works as follows

Systems and methods are provided for identifying pathological changes in medical images. Reference image data is acquired. Follow-up image data is acquired. A deformation field for the reference and follow-up image data is generated using a machine-learned network that has been trained to create deformation fields describing healthy anatomical deformation between input reference image data and input follow-up image data. The deformation field is used to align the reference image data with the follow-up image data. The co-aligned reference and follow-up image data are analyzed for changes caused by pathological phenomena.

Background for Automatic change detection of medical images

The assessment of longitudinal changes and image quality is a critical task for medical imaging techniques such as computed tomography (CT) or magnetic resonance imaging (MRI). When analyzing medical images, it is difficult to distinguish pathological changes from normal ones. For example, in a follow-up scan of the lung or another organ of a patient, normal anatomical changes may mask pathological changes such as cancerous growth or shrinkage.

The large number of normal changes can make it difficult to detect pathological changes in CT or MRI images taken at multiple time points. Manually separating normal from pathological changes is difficult and error-prone. Computer-assisted registration can improve the objectivity and accuracy of the results. Image registration can be divided into rigid and non-rigid groups. Non-rigid registration is also called deformable registration. In rigid image registration (RIR), all pixels are moved and/or rotated uniformly, so that the pixel-to-pixel relationship is the same before and after the transformation. In deformable image registration (DIR), the pixel-to-pixel relationship changes in order to model a nonlinear deformation.
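The difference between the two registration families can be illustrated with a few points. The following is a minimal sketch, not the patent's method: the function names, the 30-degree rotation, and the `stretch` displacement field are all assumptions chosen for illustration. A rigid transform applies one rotation and shift to every point, so distances between points are preserved; a deformable transform gives each point its own displacement, so distances can change.

```python
import math

def rigid_transform(points, angle_rad, shift):
    """Apply the same rotation and translation to every point (rigid model)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(c * x - s * y + shift[0], s * x + c * y + shift[1]) for x, y in points]

def deformable_transform(points, displacement):
    """Move each point by its own displacement vector (deformable model)."""
    moved = []
    for x, y in points:
        dx, dy = displacement(x, y)
        moved.append((x + dx, y + dy))
    return moved

def distance(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def stretch(x, y):
    """Hypothetical displacement field: points farther right move farther right."""
    return (0.1 * x, 0.0)

points = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
rigid = rigid_transform(points, math.radians(30), (5.0, -2.0))
deformed = deformable_transform(points, stretch)

original_length = distance(points[0], points[1])
rigid_length = distance(rigid[0], rigid[1])        # unchanged by the rigid transform
deformed_length = distance(deformed[0], deformed[1])  # changed by the local stretch
print(original_length, rigid_length, deformed_length)
```

The rigid result keeps the spacing between the first two points, while the stretched result lengthens it, which is the change in pixel-to-pixel relationship that deformable registration allows.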

RIR works well in cases where no deformation or anatomical change is expected. Some patients, however, experience anatomical changes as a result of weight loss, tumor shrinkage, and/or physiological variation in organ shape. RIR may not be able to handle these changes. DIR is more flexible than RIR and can handle local distortions between two image sets (e.g., anatomical changes). DIR methods use mathematical modeling that draws on known statistics of the movement or deformation of the organs under consideration. Segmentation uses this information to map contours from reference images to updated images. DIR can also detect anatomical landmarks and use them to register images. However, these methods do not differentiate between pathological and normal changes in anatomical structures. For example, if the DIR is too strong, it may suppress tumor growth in a follow-up image. Because normal anatomical changes are represented in the images, results from computer-assisted tools such as DIR may be inaccurate.

By way of introduction, the preferred embodiments described below include embodiments for detecting pathological changes in medical images acquired at two or more time points. A machine-learned network assists in aligning the reference and follow-up images following a biomechanical prior. The aligned reference and follow-up images are then analyzed to identify pathological changes, which can be presented to the operator.

In a first aspect, a method is provided for identifying pathological changes in follow-up medical images. Reference image data is acquired. Follow-up image data is acquired at a later time. A deformation field for the reference and follow-up image data is generated using a machine-learned network trained to create deformation fields that describe healthy anatomical deformation between input reference image data and input follow-up image data. The deformation field is used to align the reference image data with the follow-up image data. The co-aligned reference and follow-up image data are analyzed for changes caused by pathological phenomena.

In a second aspect, a method is provided for training a neural network to generate a physiological deformation field between a reference volume and a follow-up volume. Multiple pairs of reference and follow-up volumes are acquired. The pairs of volumes are segmented. The segmented pairs are converted into a plurality of mesh surfaces. The mesh surfaces are matched using point-wise correspondences. The biomechanical motion of the matched mesh surfaces is solved using a finite element method. A deformation mesh for a pair of volumes is generated from the mesh surfaces and the motion. The pair of volumes is input into the neural network, which outputs a physiological deformation field. The deformation mesh is compared with the physiological deformation field, and the neural network is adjusted based on the comparison. Generating, inputting, comparing, and adjusting are repeated for the pairs of volumes until the neural network outputs a physiological deformation field similar to the biomechanically derived deformation.
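The compare-and-adjust loop in this training method can be sketched in a few lines. This is a toy illustration under strong assumptions, not the patented training procedure: a two-parameter linear model stands in for the neural network, the `target` list stands in for displacements taken from a finite-element deformation mesh, and the learning rate, tolerance, and all numbers are invented. The sketch only shows the shape of the loop: predict a deformation, compare it with the biomechanical target, adjust the parameters, and repeat until the two are similar.

```python
def predict(params, positions):
    """Stand-in 'network': displacement is a linear function of position."""
    w, b = params
    return [w * x + b for x in positions]

def mean_squared_error(predicted, target):
    """Comparison step: average squared gap between prediction and target."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(predicted)

def train(positions, target, learning_rate=0.5, tolerance=1e-10, max_steps=5000):
    """Repeat predict / compare / adjust until the prediction matches the target."""
    w, b = 0.0, 0.0
    n = len(positions)
    loss = mean_squared_error(predict((w, b), positions), target)
    for _ in range(max_steps):
        if loss < tolerance:
            break
        predicted = predict((w, b), positions)
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (p - t) * x for p, t, x in zip(predicted, target, positions)) / n
        grad_b = sum(2 * (p - t) for p, t in zip(predicted, target)) / n
        # Adjustment step: move the parameters against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        loss = mean_squared_error(predict((w, b), positions), target)
    return (w, b), loss

# Hypothetical normalized positions and biomechanical target displacements.
positions = [0.0, 0.25, 0.5, 0.75, 1.0]
target = [2.0 * x + 0.5 for x in positions]

params, final_loss = train(positions, target)
print(params, final_loss)
```

A real implementation would replace the linear model with an image-to-image network and the scalar displacements with dense three-dimensional fields, but the stopping criterion (prediction close to the biomechanical target) plays the same role.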

In a third aspect, a system is provided for identifying pathological changes in follow-up medical images of a patient. The system includes a machine-learned network and an image processor. The machine-learned network is configured to generate a physiological deformation field between a reference image and a follow-up image. The image processor is configured to warp the follow-up image according to the physiological deformation field, and is further configured to identify a difference between the warped follow-up image and the reference image.

The following claims define the invention. Nothing in this section should be construed as limiting those claims. Additional aspects and benefits of the invention will be discussed below, in conjunction with the preferred embodiments. They may be claimed later independently or together.

Pathological differences can be detected automatically and highlighted in medical images from computed tomography (CT), magnetic resonance imaging (MRI), or other modalities. A machine-learned network automatically aligns follow-up medical imaging data with reference medical imaging data in a manner that reduces or removes normal physiological and anatomical differences. This allows the differences caused by pathology to be highlighted.

A biomechanical model is created that identifies normal anatomical and physiological differences. The biomechanical model is used to train the neural network to produce deformation fields from input volume data. The deformation fields are used to align the reference volume data with the follow-up volume data. The alignment allows pathological differences between the two data sets to be identified. The pathological differences can be highlighted on an image or presented to an operator in another way.

The approach could, for instance, reduce the amount of time doctors spend reading lung scans and increase the rate at which tumor changes are detected early. It can be used to compare reference and follow-up images of patients who are participating in lung screening programs. Example applications for lung scans include detecting diffuse changes or cancerous nodules in the lungs and highlighting their growth or shrinkage. The approach can also be applied to other imaging situations, such as different imaging modalities (e.g., CT, MRI, or ultrasound) and/or other anatomy (e.g., liver, breast, or prostate) and other diagnoses.

In an example CT imaging system 100, a patient or object 110 may be placed on a table that a motorized system can move to different positions within the CT imaging system 100. The CT imaging system includes an X-ray (or other radiation) source 140 and detector elements 150 that rotate around the object 110 while the subject is inside the circular opening 130. This rotation can be combined with movement of the table in order to scan along the longitudinal extent of the patient. The gantry can also move the source 140 and detector 150 in a helical pattern around the patient. A single rotation in the CT imaging system 100 may take less than one second. During rotation, the X-ray source 140 produces a narrow fan-shaped (or cone-shaped) beam of X-rays that passes through a targeted portion of the body of the subject 110 being imaged. The detector elements 150 (e.g., multi-ring detectors) are located opposite the X-ray source 140. They register the X-rays that pass through the body and, in doing so, capture a snapshot used to create an image. The X-ray source 140 and/or the detector elements 150 are rotated to collect snapshots through the subject at many different angles. The image data collected in the snapshots is transmitted to a control unit, which stores it or processes it into one or more cross-sectional images or volumes of the interior of the body of the subject being imaged by the CT imaging system 100.
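The helical pattern arises when gantry rotation and table travel happen at the same time. The following is a small sketch of that geometry only; the function names, the 600 mm radius, the 0.5 s rotation time, and the 40 mm/s table speed are illustrative assumptions, not values from the patent.

```python
import math

def helical_source_position(t, radius_mm, rotation_time_s, table_speed_mm_s):
    """Source position (x, y, z) in the patient frame at time t.

    The source circles the patient once per rotation_time_s while the
    table advances along z, which traces a helix around the patient.
    """
    angle = 2 * math.pi * t / rotation_time_s
    x = radius_mm * math.cos(angle)
    y = radius_mm * math.sin(angle)
    z = table_speed_mm_s * t
    return (x, y, z)

def detector_position(source):
    """Detector sits opposite the source on the same gantry ring."""
    x, y, z = source
    return (-x, -y, z)

# Hypothetical scan parameters, for illustration only.
start = helical_source_position(0.0, 600.0, 0.5, 40.0)
after_one_rotation = helical_source_position(0.5, 600.0, 0.5, 40.0)
print(start, after_one_rotation, detector_position(after_one_rotation))
```

After one full rotation the source is back at the same angle but has advanced along the patient by the table speed multiplied by the rotation time.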

When CT data is captured over time (e.g., at imaging appointments that occur hours, days, or weeks apart), a problem arises when comparing the different data sets. Objects such as patients change over time: they grow and shrink, gain or lose mass, change shape, and so on. When scanning the lungs, for instance, breathing motion or other movement may confound image alignment. Images can be aligned rigidly to detect some changes. Rigid alignment, however, does not account for changes such as organ deformation, patient weight loss, anatomical movement, or shrinkage. DIR may be required to resolve anatomical movement and accurately assess the imaging information. DIR is used to find the mapping between two images. Because of the anatomical changes that occur during treatment or over time, and the differences in breathing state from one image to the next, DIR is considered an important tool for accurate longitudinal mapping (e.g., of the lung).

FIGS. 2A and 2B show two images of a patient's lungs taken at two different times. FIG. 2A shows an initial reference CT image. FIG. 2B shows a follow-up CT image. Compared with the reference CT image, the follow-up CT image shows both normal and pathological changes. Some tissues may have grown, or boundaries may have shifted. RIR may not be able to register the two images because of deformation in the scan area. DIR can register the two images, but it may also alter the pathological changes, which could lead to a wrong diagnosis. For example, DIR may shrink a tumor because the DIR algorithm does not take pathological changes into account. An operator viewing the resulting image may then misjudge the size of the tumor because of the incorrect registration.

An image-to-image network can be used to register the images while minimizing the distortion that anatomical changes would otherwise introduce into pathological changes. The disclosed image-to-image network can improve and optimize medical diagnostics by facilitating the processing of medical images. Detecting and highlighting pathological changes within medical images can improve efficiency and the use of resources. A doctor or operator may spend less time diagnosing a patient's medical condition. A better view of pathological changes can lead to a more accurate diagnosis, and a better diagnosis can lead to improved medical outcomes. The image-to-image network provides a technical registration solution that improves the diagnostic quality of patient medical imaging.

In the method, CT data is aligned biomechanically using a machine-learned neural network, and relevant pathological phenomena are then highlighted. The machine-learned neural network is an artificial neural network that has been pre-trained on sets of image pairs (reference and follow-up) that were aligned with a biomechanical model, which produces a deformation field for each pair. Once trained, the network generates a deformation field for a new image pair. This deformation field minimizes differences due to anatomy and motion while preserving pathological differences, and it is used to co-align the new images. The residual differences between the images after co-alignment can then be analyzed and used to inform further image processing.
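The align-then-compare idea can be shown on a one-dimensional intensity profile. This is a simplified sketch under stated assumptions: the displacement field is set by hand (a uniform shift of 2 samples standing in for breathing motion) rather than produced by a trained network, the profiles and threshold are invented, and warping uses nearest-sample lookup. The lesion in the follow-up profile is both shifted and wider than in the reference.

```python
def warp(image, displacement):
    """Resample image so that output[i] = image[i + displacement[i]] (clamped)."""
    last = len(image) - 1
    return [image[min(max(i + d, 0), last)] for i, d in enumerate(displacement)]

def changed_positions(reference, other, threshold=0.5):
    """Indices where the two profiles differ by more than the threshold."""
    return [i for i, (r, o) in enumerate(zip(reference, other)) if abs(o - r) > threshold]

# Reference: lesion occupies samples 4-5.
reference = [0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0]
# Follow-up: anatomy shifted right by 2 samples and lesion grown by one sample per side.
follow_up = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0]

# Hand-set stand-in for a physiological deformation field: undo the shift only.
physiological_field = [2] * len(reference)

naive_changes = changed_positions(reference, follow_up)
aligned = warp(follow_up, physiological_field)
residual_changes = changed_positions(reference, aligned)

print(naive_changes)     # [4, 6, 7, 8]
print(residual_changes)  # [3, 6]
```

Without alignment, the difference mixes motion with growth. After the shift is removed, the residual marks only the two samples where the lesion grew. A field that also matched the lesion edges would leave no residual at all, which is the failure mode of overly aggressive deformable registration.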

The acts are performed by the system of FIG. 1, FIG. 4, or FIG. 7, or by other systems, workstations, computers, or servers. Additional, different, or fewer acts may be provided. The acts are performed in the order shown (e.g., from top to bottom) or in other orders.

At act A110, reference CT data is acquired at a first time by a medical imaging device. The CT data can be processed into images or used directly as imaging data, that is, data used to create an image. The data, images, or imaging data are made available by or within the medical imaging device. Alternatively, the data is acquired from storage or memory, for example by retrieving a previously created dataset from a picture archiving and communication system (PACS). A processor may extract the data from a PACS or from a medical records database.

The CT data represents a two-dimensional slice or a three-dimensional volume of the patient. For example, the CT data can represent an area or slice of the patient as pixel values. As another example, the CT data can represent a three-dimensional volume as voxels. The three-dimensional representation may be formatted as a stack of two-dimensional planes or slices. Values are provided for each of multiple locations distributed in two or three dimensions.
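The relationship between a volume of voxels and a stack of slices can be made concrete with nested lists. This is a minimal sketch with invented dimensions and values; the `[slice][row][column]` index order is an assumption made for illustration, and real pipelines typically use array libraries rather than plain lists.

```python
def make_volume(depth, height, width, fill=0):
    """Create a volume as a stack of `depth` slices, each `height` x `width`."""
    return [[[fill for _ in range(width)] for _ in range(height)] for _ in range(depth)]

def axial_slice(volume, z):
    """One two-dimensional slice from the stack."""
    return volume[z]

def coronal_slice(volume, y):
    """A plane cut across the stack: the same row taken from every slice."""
    return [slice_2d[y] for slice_2d in volume]

volume = make_volume(depth=3, height=4, width=5)
volume[1][2][3] = 100  # set one voxel: slice 1, row 2, column 3

pixel = axial_slice(volume, 1)[2][3]
across = coronal_slice(volume, 2)
print(pixel, len(across), len(across[0]))
```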

The data can be in any format. Although the terms "image" and "imaging" are used, the image or imaging data may be in a format that precedes the actual display of an image. Imaging data can be, for example, a collection of scalar values representing different locations in a Cartesian or polar coordinate format that differs from the display format. As another example, the image may be a set of red, green, and blue (RGB) values that are output to a display to generate the image in the display format. Imaging data can be a currently displayed or previously displayed image, in the display format or another format. Imaging data can be a dataset used for imaging, such as scan data, or a generated image representing the patient.
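One common way scalar imaging values become display values is intensity windowing, where a chosen range of scalars is mapped linearly onto gray levels. The sketch below is an illustration of that general technique and is not taken from the patent; the function name, the window center of -600 and width of 1500 (a typical lung-style window for CT values in Hounsfield units), and the sample values are assumptions.

```python
def window_to_rgb(value, center, width):
    """Map one scalar to an (R, G, B) gray level using a linear window.

    Values at or below the window map to black, values at or above it
    map to white, and values inside it are scaled linearly to 0-255.
    """
    low = center - width / 2
    high = center + width / 2
    if value <= low:
        gray = 0
    elif value >= high:
        gray = 255
    else:
        gray = int((value - low) / (high - low) * 255)
    return (gray, gray, gray)

# Hypothetical scalar samples: air, lung tissue, soft tissue, bone.
samples = [-1000, -600, 40, 700]
display = [window_to_rgb(v, center=-600, width=1500) for v in samples]
print(display)
```

The same scalar data can be shown with a different window without being re-acquired, which is one reason the stored format and the display format are kept distinct.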

Any type of medical imaging data and corresponding medical scanner can be used. In one embodiment, the imaging data is a computed tomography (CT) image acquired with a CT system. A chest CT dataset, for example, may be obtained by scanning the lungs. The output image can be a two-dimensional image slice. For a three-dimensional CT image, the raw data from the detector is reconstructed into a three-dimensional representation. As another example, magnetic resonance (MR) data representing a patient is acquired with an MR system. The data is acquired by scanning the patient with an imaging sequence. The acquired data is k-space data representing an interior region of the patient. Fourier analysis is used to reconstruct the data from k-space into a three-dimensional object or image space. The data may also be ultrasound data. Beamformers and a transducer array scan the patient acoustically. The received acoustic signals are beamformed and detected to produce polar-coordinate ultrasound data representing the patient.
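The Fourier step for MR data can be illustrated in one dimension. This is a simplified sketch: it uses a direct discrete Fourier transform from the standard library instead of a fast multidimensional transform, it simulates k-space from an invented intensity profile rather than using scanner data, and it ignores practical issues such as noise, sampling trajectories, and coil sensitivities.

```python
import cmath

def dft(signal):
    """Forward discrete Fourier transform: object space to k-space."""
    n = len(signal)
    return [
        sum(signal[x] * cmath.exp(-2j * cmath.pi * k * x / n) for x in range(n))
        for k in range(n)
    ]

def inverse_dft(kspace):
    """Inverse discrete Fourier transform: k-space back to object space."""
    n = len(kspace)
    return [
        sum(kspace[k] * cmath.exp(2j * cmath.pi * k * x / n) for k in range(n)) / n
        for x in range(n)
    ]

# Hypothetical one-dimensional intensity profile through the patient.
profile = [0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 2.0, 0.0]

kspace = dft(profile)                                   # simulated acquisition
reconstructed = [abs(v) for v in inverse_dft(kspace)]   # magnitude image
print([round(v, 6) for v in reconstructed])
```

Taking the magnitude of the complex result mirrors the usual practice of displaying magnitude images; for this non-negative test profile it recovers the original values up to floating-point error.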
